Sunday, 29 April 2012

Tuesday, 27 March 2012

Video Raw Color Formats

Many raw video color formats are in use, and the right one depends on the application. The two most popular families are the RGB and YUV formats.

Any color can be obtained as a mix of red, green and blue components. Most devices that capture from the real world in the analog domain produce RGB data. Modern digital cameras capture the raw format at 36 or 48 bits per pixel, with R, G and B each taking either 12 or 16 bits. Storing data directly in this form takes a lot of memory and a lot of processing time later on, so in the raw format generally only 8 bits each of R, G and B are kept before converting to a compressed format.

The YUV format is useful for processing the luminance and color information separately. The human eye cannot detect changes in color as accurately as changes in luminance, so the color information can be stored with fewer bits per pixel, which is useful in memory-constrained systems.

The formats in both the RGB and YUV families are named based on

1.) how much memory each pixel takes (from 1 bit per pixel (bpp) up to 32 bpp).

2.) how the R, G, B or Y, U, V components are placed with respect to each other. These placements have names like Planar, Interleaved and SemiPlanar.

Planar format: In this format each component is stored as a complete plane (width x height samples). The R, G and B planes in the case of RGB formats, or the Y, U and V planes in the case of YUV formats, are placed one after the other.

Interleaved format: In this format the component information of 1, 2 or 4 pixels is placed together.

SemiPlanar: This is generally used for YUV formats. The Y information is stored in planar format, followed by the U and V information in interleaved format.
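To make the difference between planar and interleaved placement concrete, here is a minimal Python sketch that regroups a buffer from one layout to the other. The function names are made up for this illustration, and it assumes 8-bit samples with all planes the same size (so it does not cover subsampled YUV).

```python
def interleaved_to_planar(data: bytes, channels: int = 3) -> bytes:
    """Regroup R0 G0 B0 R1 G1 B1 ... into R0 R1 ... G0 G1 ... B0 B1 ..."""
    if len(data) % channels:
        raise ValueError("buffer length must be a multiple of the channel count")
    # Every `channels`-th byte starting at offset c belongs to plane c.
    return b"".join(data[c::channels] for c in range(channels))


def planar_to_interleaved(data: bytes, channels: int = 3) -> bytes:
    """Inverse of interleaved_to_planar for equally sized planes."""
    if len(data) % channels:
        raise ValueError("buffer length must be a multiple of the channel count")
    plane_size = len(data) // channels
    planes = [data[c * plane_size:(c + 1) * plane_size] for c in range(channels)]
    # Take one sample from each plane in turn.
    return bytes(sample for group in zip(*planes) for sample in group)


# Two RGB pixels: (1, 2, 3) and (4, 5, 6)
interleaved = bytes([1, 2, 3, 4, 5, 6])
planar = interleaved_to_planar(interleaved)
```

Here `planar` holds the two red samples first, then the two green samples, then the two blue samples.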

Gray2, Gray4, Gray16, Gray256 (Monochrome):

Each pixel holds only luma information; the chroma information is ignored. Each pixel is represented by 1, 2, 4 or 8 bits of luma for the formats Gray2, Gray4, Gray16 and Gray256 respectively. These formats are also called GrayN pixel formats. The bits represent the gray level, with 0 being black and N-1 being white.
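As a rough sketch of what this means in practice, the Python below reduces an 8-bit luma sample to a GrayN level and expands it back for display. Keeping the most significant bits is an assumption made for this example (the text above does not say how the level is derived), and the function names are hypothetical.

```python
def luma_to_gray(y: int, bits: int) -> int:
    """Keep the top `bits` bits of an 8-bit luma sample (assumed method)."""
    return y >> (8 - bits)


def gray_to_luma(level: int, bits: int) -> int:
    """Expand a level in 0..N-1 to 0..255 so that N-1 maps to white (255)."""
    max_level = (1 << bits) - 1
    return (level * 255 + max_level // 2) // max_level


# Gray4 uses 2 bits per pixel, so there are four levels: 0, 1, 2, 3.
gray4_levels = [gray_to_luma(level, 2) for level in range(4)]
```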

RGB Formats: These formats are generally interleaved unless specified otherwise. The figure below shows how RGB values are arranged in both planar and interleaved formats.

RGB12:

Pixels are stored in 1.5 bytes, little-endian, with the 12 bits comprising 4 bits of red, 4 bits of green and 4 bits of blue, constituting 12 bpp.

Color4K:

Pixels are stored in two bytes, little-endian, with the 16-bit word comprising 4 bits of red, 4 bits of green, 4 bits of blue and the most significant 4 bits unused. The red bits occupy the least significant bits of the most significant byte and the blue bits occupy the least significant bits of the least significant byte. When separated into individual bytes, each component is scaled to form a true-color mapping (i.e. multiply each component by 17).
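The bit layout described above can be sketched in Python as follows. The function name is made up for this example, and placing green in the upper four bits of the low byte is inferred from the description (it is the only nibble left over).

```python
def color4k_to_rgb888(lo: int, hi: int) -> tuple:
    """Expand one Color4K pixel, given as (low byte, high byte), to 8-bit R, G, B."""
    word = lo | (hi << 8)      # little-endian: the low byte is stored first
    r = (word >> 8) & 0xF      # low nibble of the most significant byte
    g = (word >> 4) & 0xF      # high nibble of the least significant byte (inferred)
    b = word & 0xF             # low nibble of the least significant byte
    # 0xF * 17 == 255, so multiplying by 17 spreads 0..15 evenly over 0..255.
    return r * 17, g * 17, b * 17
```

For instance, `color4k_to_rgb888(0x80, 0x0F)` treats the word as 0x0F80, which is full red, roughly half green and no blue.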

RGB16/Color64K:

Pixels are stored in two bytes, little-endian, with the 16-bit word (16 bpp) comprising 5 bits for red, 6 bits for green and 5 bits for blue. The red bits occupy the most significant bits and the blue bits occupy the least significant bits. When separated into individual bytes, each component is scaled to form a true-color mapping (i.e. multiply red and blue by 255/31 and green by 255/63).
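A small Python sketch of this unpacking is given below. The function name is hypothetical, and rounding to the nearest integer is a choice made for the example; the text only gives the scale factors.

```python
def rgb565_to_rgb888(lo: int, hi: int) -> tuple:
    """Expand one RGB16 pixel, given as (low byte, high byte), to 8-bit R, G, B."""
    word = lo | (hi << 8)          # little-endian 16-bit word
    r5 = (word >> 11) & 0x1F       # top 5 bits
    g6 = (word >> 5) & 0x3F        # middle 6 bits
    b5 = word & 0x1F               # bottom 5 bits
    # Scale by 255/31 and 255/63 using integer arithmetic with rounding.
    r = (r5 * 255 + 15) // 31
    g = (g6 * 255 + 31) // 63
    b = (b5 * 255 + 15) // 31
    return r, g, b
```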

RGB888/RGB24:

Pixels are stored in three bytes, one for each of red, green and blue. The blue, green and red bytes can be stored in that order or in the reverse order.

Color16M:

Pixels are stored in three bytes, one for each of red, green and blue. The blue byte is stored first and the red byte last.

Color16MA, Color16MU (32-bit):

Pixels are stored in four bytes, one each for red, green and blue, plus one byte that is either unused or holds the alpha channel. The blue byte is stored first, the green byte next and the alpha channel byte last. The alpha channel is used to determine the transparency.
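The byte order can be sketched in Python as below. Two things here are assumptions rather than statements from the text: that red is the third byte (the text names only the first, second and last), and that an alpha of 255 means fully opaque when blending. The function names are hypothetical.

```python
def pack_color16ma(r: int, g: int, b: int, a: int = 255) -> bytes:
    """Pack one pixel as blue, green, red, alpha (red as third byte is assumed)."""
    return bytes([b, g, r, a])


def unpack_color16ma(pixel: bytes) -> tuple:
    """Return (r, g, b, a) from a 4-byte pixel."""
    b, g, r, a = pixel[:4]
    return r, g, b, a


def blend_channel(src: int, dst: int, alpha: int) -> int:
    """Mix one source channel over a background channel (255 assumed opaque)."""
    return (src * alpha + dst * (255 - alpha) + 127) // 255
```

With this convention, an alpha of 255 returns the source value unchanged and an alpha of 0 returns the background value.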

Palette versions of Color16 and Color256.

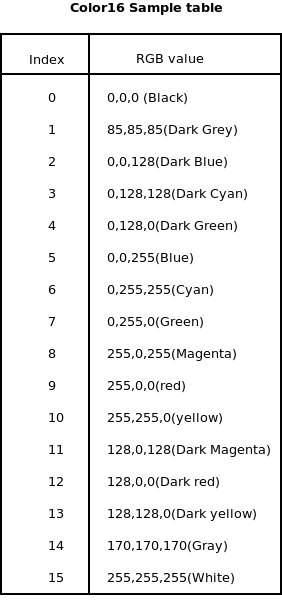

Color16 pixel Format:

Pixels are stored in four bits, little-endian (first pixel in the least significant bits). Each value maps onto a value in the color palette. This layout enables quick inversion of a color by inverting its index. If there is no color palette then the following fixed palette is assumed.
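A minimal Python sketch of reading the 4-bit indices is shown below. The function names are made up, and treating "inverting the index" as a bitwise complement of the four bits is an assumption of this example.

```python
def unpack_color16(data: bytes) -> list:
    """Split each byte into two palette indices, first pixel in the low nibble."""
    indices = []
    for byte in data:
        indices.append(byte & 0x0F)   # first pixel: least significant 4 bits
        indices.append(byte >> 4)     # second pixel: most significant 4 bits
    return indices


def invert_index(index: int) -> int:
    """Complement the 4-bit index (assumed meaning of index inversion)."""
    return index ^ 0x0F
```

If a row has an odd number of pixels, the last high nibble returned here is padding and should be dropped by the caller.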

Color 256 pixel Format:

Pixels are stored in one byte. Each value maps onto a value in the color palette. If there is no color palette then a fixed palette is assumed. This consists of colors whose component values are all multiples of 0x33 (6x6x6 = 216 colors), pure primaries with one component set to a multiple of 0x11, not including multiples of 0x33 (10 intensities x 3 primaries = 30 colors), and pure gray shades with all components set to a multiple of 0x11, not including multiples of 0x33 (10 intensities = 10 colors). This makes a total of 216+30+10 = 256 colors. Half of the 0x33 multiples occupy the lower 108 values (0-107) and the other half occupy the upper 108 (148-255). The darker reds come next (108-112), followed by the darker greens (113-117) and blues (118-122), then all the grays (123-132), then the lighter blues (133-137), lighter greens (138-142) and then the lighter reds (143-147). This layout enables quick inversion of a color by inverting its index. Example values are:
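The counting in this description can be checked with a few lines of Python. This sketch only enumerates the three groups of colors and adds up the index ranges quoted above; it does not try to reproduce the order of colors inside each range, which the text does not spell out. All variable names are made up for the example.

```python
# Six levels per component: 0x00, 0x33, 0x66, 0x99, 0xCC, 0xFF
cube_steps = range(0x00, 0x100, 0x33)
# Multiples of 0x11 that are not multiples of 0x33: ten levels
extra_steps = [v for v in range(0x00, 0x100, 0x11) if v % 0x33]

cube = {(r, g, b) for r in cube_steps for g in cube_steps for b in cube_steps}
primaries = {
    tuple(v if i == channel else 0 for i in range(3))
    for channel in range(3)
    for v in extra_steps
}
grays = {(v, v, v) for v in extra_steps}
palette_size = len(cube | primaries | grays)

# Index ranges as (first, last), both inclusive.
index_ranges = {
    "0x33 multiples, first half": (0, 107),
    "darker reds": (108, 112),
    "darker greens": (113, 117),
    "darker blues": (118, 122),
    "grays": (123, 132),
    "lighter blues": (133, 137),
    "lighter greens": (138, 142),
    "lighter reds": (143, 147),
    "0x33 multiples, second half": (148, 255),
}
total_indices = sum(last - first + 1 for first, last in index_ranges.values())
```

Both `palette_size` and `total_indices` come out as 256, so the three groups do not overlap and the ranges cover every index exactly once.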

YUV Color formats:

YUV420P:

Pixels are stored in planar format and the color information is subsampled in both the horizontal and vertical directions. Let Y11, Y12 and Y21, Y22 be the first two luminance bytes in the first and second rows respectively. These four pixels share one byte of U, stored after all the luminance bytes of the entire image, and one byte of V, stored after all the Y and U information of the entire image. A pixel therefore takes 12 bits on average in this format.
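To see where the samples of a given pixel end up, here is a small Python sketch of the offset arithmetic. It assumes 8-bit samples, even width and height, and no padding between rows; the function name is hypothetical.

```python
def yuv420p_offsets(x: int, y: int, width: int, height: int) -> tuple:
    """Return the byte offsets (Y, U, V) of the samples used by pixel (x, y)."""
    y_size = width * height                  # full-resolution Y plane
    c_width = width // 2                     # chroma planes are half size each way
    c_size = c_width * (height // 2)
    c_index = (y // 2) * c_width + (x // 2)  # one chroma sample per 2x2 block
    y_off = y * width + x
    u_off = y_size + c_index                 # U plane follows the Y plane
    v_off = y_size + c_size + c_index        # V plane follows the U plane
    return y_off, u_off, v_off
```

For a 4x4 image the pixels (0, 0), (1, 0), (0, 1) and (1, 1) all map to the same U and V offsets, and the whole frame takes 16 + 4 + 4 = 24 bytes, which is 12 bits per pixel.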

YUV420I:

This format is similar to YUV420P except that the information is stored in interleaved format instead of planar format.

YUV420SP(NV21):

In this format Y is stored as a plane of Width*Height bytes, followed by U and V stored in interleaved format, which takes (Width/2)*(Height/2)*2 bytes. In NV21 each interleaved pair is ordered V first and then U (NV12 is the variant with U first).
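The same offset arithmetic for the semi-planar case can be sketched in Python as follows. It assumes 8-bit samples, even dimensions and no row padding, and it relies on the NV21 convention that V precedes U in each chroma pair. The function names are made up for the example.

```python
def nv21_size(width: int, height: int) -> int:
    """Total bytes: Y plane plus the interleaved chroma plane."""
    return width * height + (width // 2) * (height // 2) * 2


def nv21_offsets(x: int, y: int, width: int, height: int) -> tuple:
    """Return the byte offsets (Y, U, V) of the samples used by pixel (x, y)."""
    y_size = width * height
    # Each chroma row holds width/2 pairs of 2 bytes, so its stride is `width`.
    pair = y_size + (y // 2) * width + (x // 2) * 2
    return y * width + x, pair + 1, pair     # V is stored first, then U
```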

YUV422VP:

The color information is subsampled in the vertical direction and stored in planar format. The same color information is used for two pixels in the vertical direction. Each pixel takes 2 bytes on average (16 bpp).

YUV422HP:

Most commonly called 422P. The color information is subsampled in the horizontal direction and stored in planar format. The same color information is used for two pixels in the horizontal direction. Each pixel takes 2 bytes on average (16 bpp).

YUV422VI and YUV422HI:

These formats are similar to 422VP and 422HP respectively, except that the information is stored in interleaved format. The most popular YUV422HI formats are UYVY and YYUV. As the names suggest, the chroma and luma information is interleaved in the order given by the name.
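As an illustration of the interleaved 4:2:2 layout, the Python sketch below walks a UYVY buffer four bytes at a time and yields one (Y, U, V) triple per pixel. It assumes 8-bit samples, and the function name is hypothetical.

```python
def uyvy_to_yuv_pixels(data: bytes) -> list:
    """Expand UYVY groups (U, Y0, V, Y1) into per-pixel (Y, U, V) triples."""
    pixels = []
    for i in range(0, len(data) - 3, 4):
        u, y0, v, y1 = data[i:i + 4]
        # Two horizontally adjacent pixels share the same U and V.
        pixels.append((y0, u, v))
        pixels.append((y1, u, v))
    return pixels
```

Four bytes describe two pixels, which is where the 16 bpp figure comes from.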

YUV444P:

In this format the Y, U and V information is stored in planar format without any subsampling of the color. Each pixel therefore takes 3 bytes, i.e. 24 bpp.

YUV444I:

Similar to YUV444P except that Y, U and V are stored in interleaved format. It also takes 24 bpp.
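The bits-per-pixel figures quoted for the YUV formats translate directly into frame sizes. A quick Python sketch, assuming 8-bit samples, even dimensions and no row padding (the table and function names are made up here):

```python
# Average bits per pixel for each subsampling scheme discussed above.
BITS_PER_PIXEL = {"YUV420": 12, "YUV422": 16, "YUV444": 24}


def frame_size_bytes(fmt: str, width: int, height: int) -> int:
    """Size of one frame; planar and interleaved variants take the same space."""
    return width * height * BITS_PER_PIXEL[fmt] // 8


vga_sizes = {fmt: frame_size_bytes(fmt, 640, 480) for fmt in BITS_PER_PIXEL}
```

For a 640x480 frame this gives 460800, 614400 and 921600 bytes respectively, so 4:2:0 needs half the memory of 4:4:4.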

Dithering:

Dithering is used to improve the visual quality of the image by adding random noise when the values are quantized. The effect is most prominent when the quantization is coarse. Among the formats discussed above, dithering matters for the Gray2, Gray4, Gray16, RGB12, RGB16, Color16 and Color256 formats, as these formats store only heavily quantized gray or color values.
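The idea can be sketched with simple random dithering in Python. This is one basic variant chosen for illustration, not a description of what any particular device does: uniform noise is added before rounding down, so that the average output level tracks the input brightness. The function names are hypothetical.

```python
import random


def quantize(y: int, bits: int) -> int:
    """Plain rounding of an 8-bit value to a level in 0..N-1."""
    max_level = (1 << bits) - 1
    return (y * max_level + 127) // 255


def dither_quantize(y: int, bits: int, rng: random.Random) -> int:
    """Add uniform noise below one quantization step, then round down."""
    max_level = (1 << bits) - 1
    return (y * max_level + rng.randrange(255)) // 255


# A flat area of brightness 100 stored as Gray2 (1 bit per pixel).
rng = random.Random(0)
plain = [quantize(100, 1) for _ in range(10000)]
dithered = [dither_quantize(100, 1, rng) for _ in range(10000)]
white_fraction = sum(dithered) / len(dithered)
```

Without dithering every pixel of the flat area becomes black. With dithering a fraction of the pixels close to 100/255 becomes white, so from a distance the area still looks dark gray.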

Wednesday, 29 February 2012

Android StageFright MediaFramework Playback

During Android device bootup, the processes listed in init.rc are started, among them adb, ril, zygote and mediaserver.

Zygote helps in reducing the loading time of applications. All the core libraries are loaded once and exist in a single place, generally read-only, so a new process can be forked from there instead of loading them again. Only the libraries specific to a task need to be loaded, since all the dependent core/common libraries are already loaded by the Zygote process.

mediaserver handles all media-related operations and is also started at boot time. Even if we kill the mediaserver process, it will be created again. The mediaserver process instantiates services such as AudioFlinger.

First, let's understand what happens for the media part during device bootup. The figure below shows a few important calls.

DEVICE BOOTUP:

Now let's see how the playback sequence happens in the Android StageFright media framework. The figures below depict the flow of the important functions in the StageFright framework.

To concentrate only on the playback part, let's consider a file browser application, which avoids the thumbnail and metadata complications. When we select a file for playback, a new mediaplayer is created with the fd. The main calls from the application to the middleware are "setDataSource", "prepare", "prepareAsync", "_start" and "_stop". The flow for "setDataSource", "prepare", "prepareAsync" and "_start" is explained below.
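The order in which these calls are expected can be modelled with a tiny state machine. The Python below is only a toy model for illustration, not Android code: the class name is made up, the state names are simplified, and "prepareAsync" is treated as if it completed immediately.

```python
class ToyPlayer:
    """Toy model of the playback call order; not the real framework."""

    # (current state, call name) -> next state
    TRANSITIONS = {
        ("idle", "setDataSource"): "initialized",
        ("initialized", "prepare"): "prepared",
        ("initialized", "prepareAsync"): "prepared",   # simplification
        ("prepared", "_start"): "started",
        ("started", "_stop"): "stopped",
    }

    def __init__(self) -> None:
        self.state = "idle"

    def call(self, name: str) -> str:
        """Apply one call and return the new state, or fail if out of order."""
        key = (self.state, name)
        if key not in self.TRANSITIONS:
            raise RuntimeError(f"{name} is not valid in state {self.state}")
        self.state = self.TRANSITIONS[key]
        return self.state


player = ToyPlayer()
states = [player.call(name) for name in ("setDataSource", "prepare", "_start")]
```

Calling "_start" on a fresh `ToyPlayer` raises an error, which mirrors the fact that the data source has to be set and prepared before playback can begin.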

SET DATA SOURCE:

PREPARE / PREPARE ASYNC:

START:

Thursday, 2 February 2012

Technical Links

Android supported media formats:

http://developer.android.com/guide/appendix/media-formats.html

Android Binder Stuff:

http:/

http:/

LG OpenSource:

http:/

Android Recording Path:

http:/

http:/

http:/

JNI Stuff:

http:/

http:/

Finding out Memory Leaks in Android:

http://freepine.blogspot.in/2010/02/analyze-memory-leak-of-android-native.html

OpenGL Stuff:

http://www.eclipse.org/articles/Article-SWT-OpenGL/opengl.html

http://timelessname.com/jogl/lesson01/

http:/

SIP Stuff:

http:/

Audio Mixing:

http:/

RTSP Stuff:

http:/
